GPT-4.1 vs Claude 3.7 vs Google Gemini 2.5 Pro: AI Coding Showdown for Vibe Coders

AI coding assistants are evolving at a breakneck pace. OpenAI's GPT-4.1 just arrived with significant upgrades, and it's squaring off against Anthropic's Claude 3.7 Sonnet and Google's Gemini 2.5 Pro – two other cutting-edge models. These models promise better code generation, the ability to juggle massive projects in memory, and more accessible pricing. This post will introduce GPT-4.1 and compare it to Claude 3.7 and Gemini 2.5 Pro across three key areas: coding capabilities, context length, and API pricing. We'll also explore what these advances mean for "vibe coding" – the emerging workflow where you program by prompting an AI partner rather than writing every line of code.

Coding Capabilities

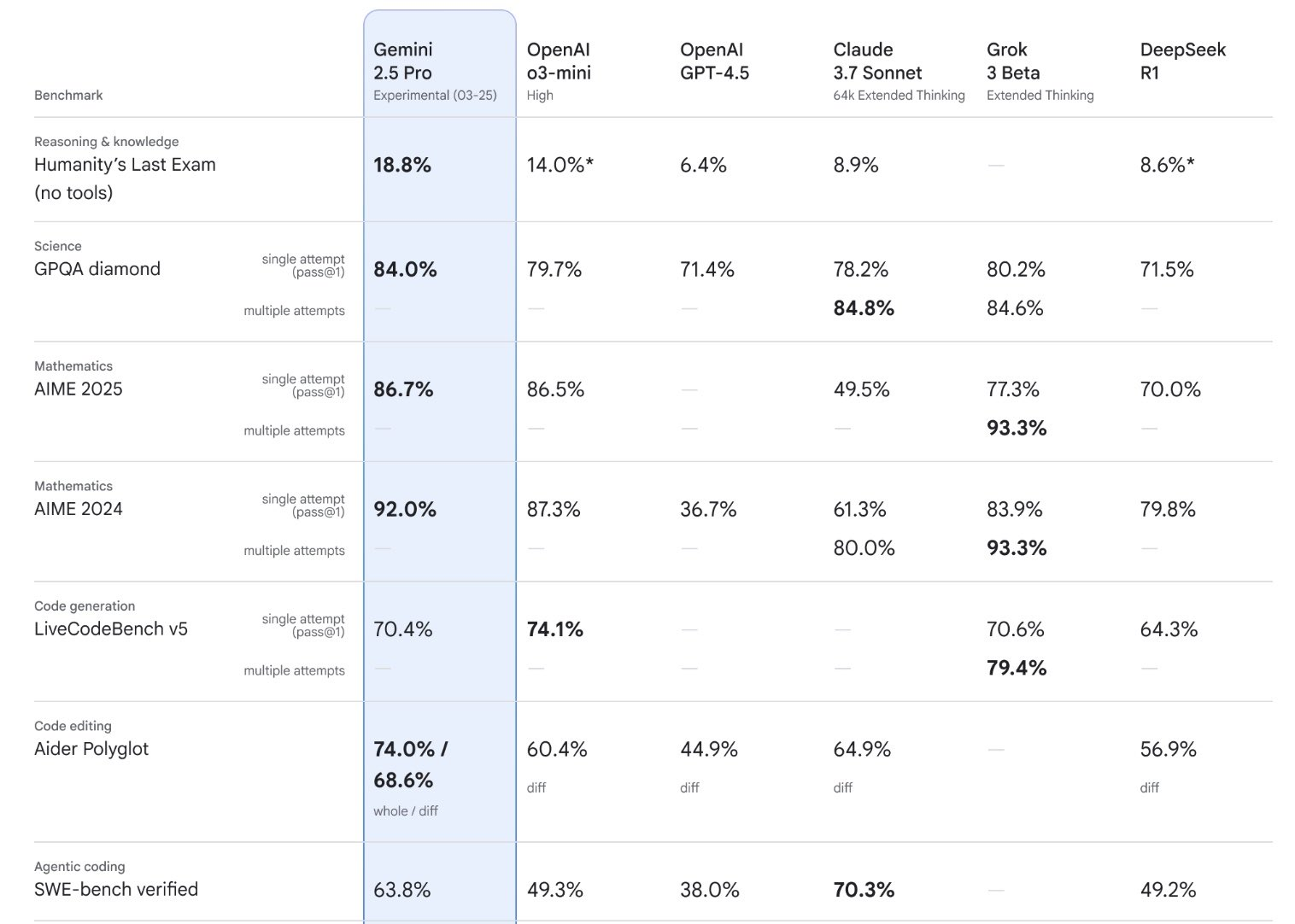

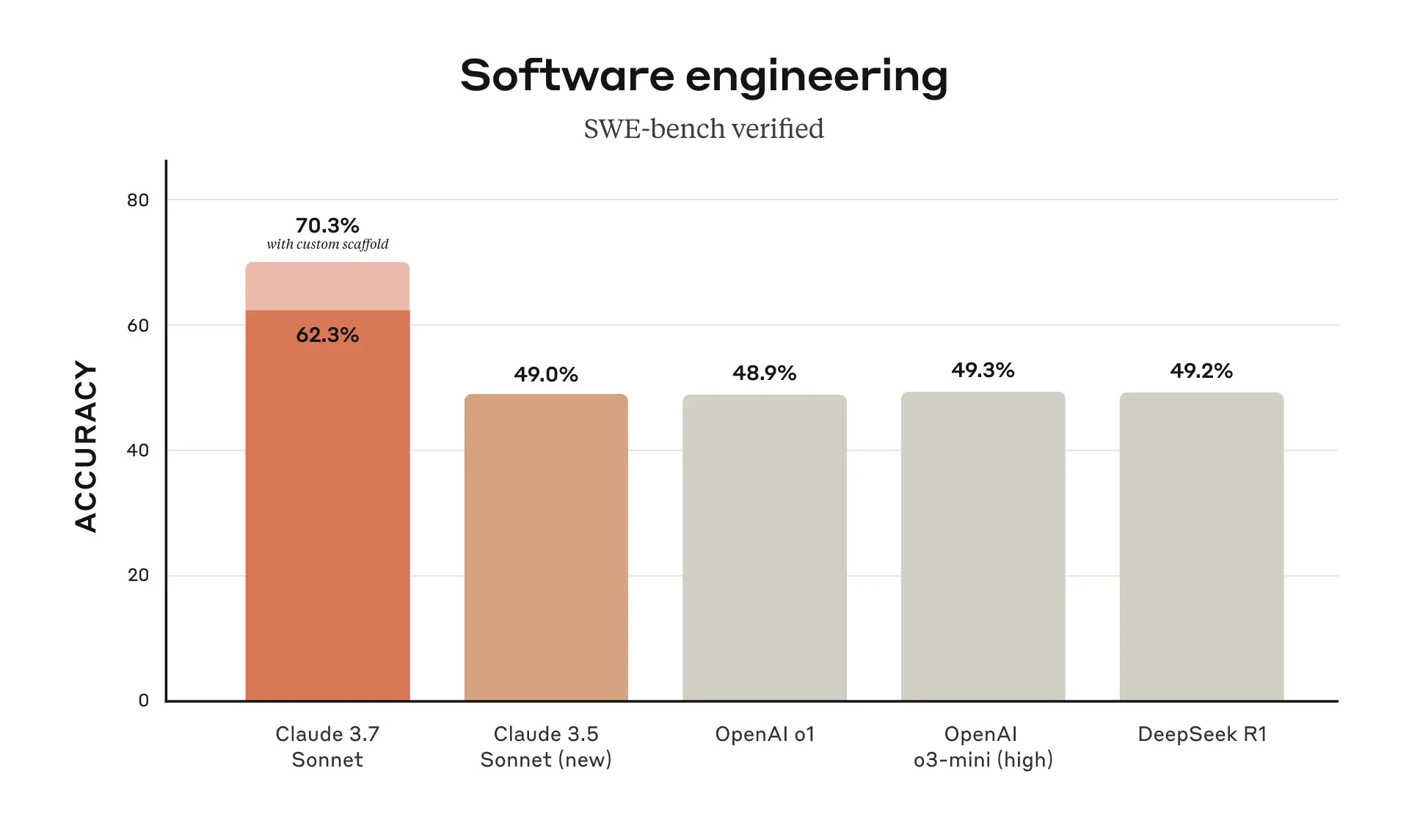

When it comes to generating and understanding code, all three models are extremely powerful – but each has its strengths. On SWE-bench Verified, a popular benchmark built from real-world GitHub issues, Google's Gemini 2.5 Pro currently edges out the others with a score of about 63.8% (using a custom agent setup). In practical terms, testers have seen Gemini produce complex programs in one go – for example, a working flight simulator and a 3D Rubik's Cube solver from just a prompt.

Anthropic's Claude 3.7 Sonnet is not far behind, scoring about 62.3% on the same benchmark (and around 70% with a custom scaffold, according to Anthropic). Claude has been praised in early testing as "best-in-class for real-world coding tasks," excelling at handling complex codebases and planning code.

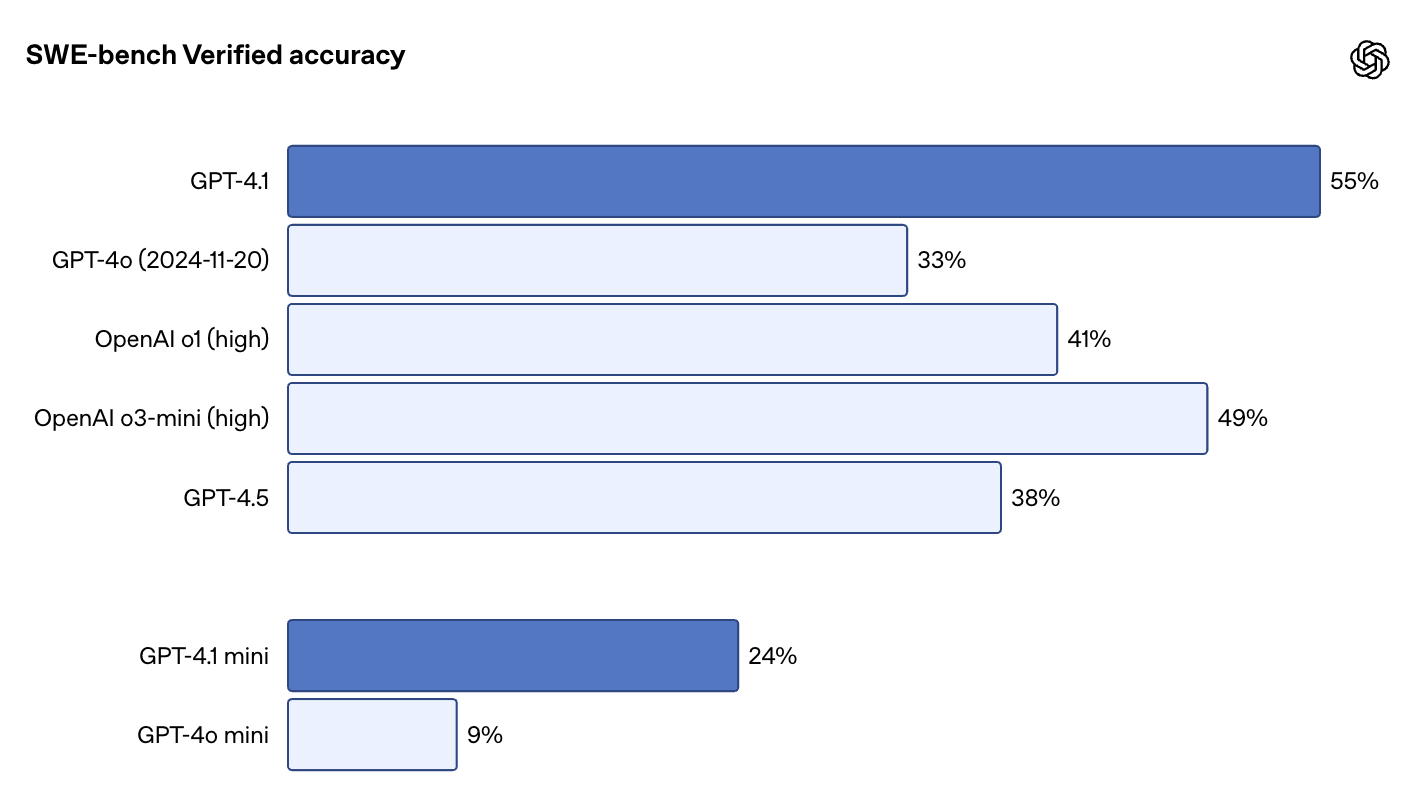

OpenAI's GPT-4.1, while slightly trailing in raw benchmark numbers (~54–55% on SWE-bench), was designed with extensive developer feedback. OpenAI optimized GPT-4.1 for reliability and format adherence, particularly in front-end web development scenarios. In practice that means GPT-4.1 tends to produce clean, well-structured code with fewer formatting errors – a huge plus when you're iterating on a creative coding project.

Debugging and reasoning. Pure coding accuracy isn't everything.

Claude 3.7 offers a distinctive "extended thinking" mode (often called Thinking Mode) that lets it walk through its reasoning step by step, which can be invaluable for debugging and complex problem-solving. When turned on, Claude will literally show you its chain of thought as it analyzes code, helping pinpoint bugs or explain tricky logic. This transparency feels like pair programming with an AI that narrates its thought process.
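If you call Claude through the API rather than a chat app, extended thinking is switched on per request. The sketch below only builds the request body. It assumes the Messages API field names from Anthropic's documentation (`thinking`, `budget_tokens`) and the `claude-3-7-sonnet-20250219` model ID, and `build_thinking_request` is a hypothetical helper, so check the current docs before relying on it.

```python
import json

# Assumed values, taken from Anthropic's Messages API docs for Claude 3.7 Sonnet;
# verify against the current documentation before use.
MODEL_ID = "claude-3-7-sonnet-20250219"
MIN_THINKING_BUDGET = 1024  # smallest thinking budget the API accepts


def build_thinking_request(prompt: str, budget_tokens: int = 8000,
                           max_tokens: int = 16000) -> dict:
    """Hypothetical helper: build a request body with extended thinking enabled."""
    if budget_tokens < MIN_THINKING_BUDGET:
        raise ValueError(f"budget_tokens must be at least {MIN_THINKING_BUDGET}")
    if budget_tokens >= max_tokens:
        # Thinking tokens count toward max_tokens, so the budget must be smaller.
        raise ValueError("budget_tokens must be less than max_tokens")
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_thinking_request(
        "This loop never terminates. Why?\n\ni = 0\nwhile i < 10:\n    print(i)"
    )
    print(json.dumps(body, indent=2))
```

The same fields can be passed as keyword arguments to `client.messages.create(...)` in Anthropic's Python SDK; the response then carries `thinking` content blocks (the narrated reasoning) ahead of the final `text` block.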

Gemini 2.5 Pro, on the other hand, has strong reasoning abilities baked in (Google calls these "thinking" models) and tends to suggest solid fixes by analyzing context, as reflected in its high coding benchmark scores. It doesn't expose its reasoning step-by-step to the user, but it often catches errors across different programming scenarios with ease.

GPT-4.1 leverages its excellent instruction-following skills for debugging. If you clearly describe an issue or provide an error log, GPT-4.1 will diligently go through the code to find the problem and correct it. Thanks to its large memory (context window), it can consider the full code and error context at once, reducing oversights. In short, Claude's visible reasoning can make it feel like a collaborative tutor in debugging, while Gemini and GPT-4.1 act as super-smart auto-correctors for code bugs.
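The "provide an error log" workflow is easy to make routine. This minimal sketch, using only the Python standard library, runs a snippet, captures its traceback, and packages intent, code, and error into one prompt you could paste into GPT-4.1 (or either of the other models). The helper names and prompt wording are illustrative assumptions, not an official template.

```python
import traceback

# A deliberately buggy snippet standing in for AI-generated code.
BUGGY_SOURCE = """def average(values):
    return sum(values) / len(values)

result = average([])
"""


def capture_error(source: str) -> str:
    """Execute source and return its formatted traceback, or "" if it runs cleanly.

    exec() runs arbitrary code, so only use this on code you trust.
    """
    try:
        exec(source, {})
    except Exception:
        return traceback.format_exc()
    return ""


def build_debug_prompt(source: str, error_log: str, goal: str) -> str:
    """Combine the intended behavior, the code, and the error into one prompt."""
    return (
        f"Goal: {goal}\n\n"
        f"Code:\n{source}\n"
        f"Error log:\n{error_log}\n"
        "Find the root cause, explain it in one or two sentences, "
        "and return the corrected code."
    )


if __name__ == "__main__":
    log = capture_error(BUGGY_SOURCE)
    print(build_debug_prompt(BUGGY_SOURCE, log, "average([]) should return 0.0"))
```

Stating the goal explicitly matters as much as the traceback: the error says *where* the code breaks, while the goal tells the model what "fixed" should mean.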

It's worth noting that all three models support not just writing code but understanding existing code. For example, GPT-4.1 can ingest an entire codebase (more on its huge context size below) and answer questions about how different parts work together. Claude 3.7 with its focused reasoning can explain code snippets or architectures in a very human-like way, which is great for learning and onboarding. And Gemini's advanced analysis, even across different data types (text, diagrams, etc.), makes it capable of integrating context like reading a UI design image and then generating the corresponding code. All three are generalist coders (many languages and domains), so whether you're scripting a Python data visualization or debugging a JavaScript web app, you'll find capable help. The differences lie in style: GPT-4.1 is like the meticulous senior developer, Claude is the thoughtful problem-solver who explains everything, and Gemini is the savant that delivers flashy results across the board.
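Whether "an entire codebase" really fits depends on its token count. The sketch below estimates that count with the common rule of thumb of roughly four characters per token (an assumption; each provider's tokenizer differs) and compares it with the context limits listed in this post, keeping a hypothetical reserve for the model's reply. The model labels are illustrative, not API IDs.

```python
from pathlib import Path

# Context limits (tokens) as listed in this post; the keys are illustrative labels.
CONTEXT_LIMITS = {
    "gpt-4.1": 1_047_576,
    "claude-3.7-sonnet": 200_000,
    "gemini-2.5-pro": 1_048_576,
}
CHARS_PER_TOKEN = 4  # rough heuristic; real tokenizers vary by language and content


def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def load_codebase(root: str, suffixes=(".py", ".js", ".ts", ".html", ".css")) -> dict:
    """Read every source file under root whose extension is in suffixes."""
    return {
        str(path): path.read_text(encoding="utf-8", errors="ignore")
        for path in Path(root).rglob("*")
        if path.is_file() and path.suffix in suffixes
    }


def models_that_fit(files: dict, reserve_for_output: int = 32_000) -> list:
    """Return the models whose context window holds all files plus a reply budget."""
    # Each file is prefixed with its path so the model can tell files apart.
    total = sum(estimate_tokens(f"# {path}\n{body}\n") for path, body in files.items())
    return [name for name, limit in CONTEXT_LIMITS.items()
            if total + reserve_for_output <= limit]


if __name__ == "__main__":
    print(models_that_fit(load_codebase(".")))
```

A project of about 2 MB of source lands near 500k estimated tokens: comfortably inside GPT-4.1's and Gemini's windows, but well past Claude's 200k.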

Model Comparison Table

For a quick overview, here's a side-by-side comparison of key features and trade-offs:

| Feature | OpenAI GPT-4.1 | Anthropic Claude 3.7 | Google Gemini 2.5 Pro |
|---|---|---|---|
| Coding Performance | Excellent on code; ~54.6% on SWE-bench (improved reliability and format adherence) | Excellent on code; ~62.3% on SWE-bench (state-of-the-art with reasoning mode, very few errors) | Outstanding on code; ~63.8% on SWE-bench (best-in-class accuracy, handles complex projects) |
| Max Context Window | 1,047,576 tokens (~1M tokens, ~750k words) | 200,000 tokens (large, but ~1/5 of GPT-4.1/Gemini) | 1,048,576 tokens (1M tokens; ultra-long context, 2M planned) |
| Multimodal Support | Text + Images | Text + Images | Text + Images, Audio, Video (fully multimodal input) |
| Notable Features | Optimized for front-end and web dev; robust instruction-following; API only (not in ChatGPT UI) | "Extended Thinking" mode for step-by-step reasoning; Claude Code tool for CLI coding automation | Integrated chain-of-thought reasoning; excels at complex tasks; currently free preview (with rate limits) |
| API Pricing (per 1M tokens) | $2 input, $8 output (50% off in batch; 75% off cached) | $3 input, $15 output (90% off cached; 50% off batch) | $1.25 input, $10 output (for ≤200k-token prompts); $2.50 input, $15 output (>200k) |

Table Notes: All models support multiple programming languages and tasks beyond coding (GPT-4.1 and Claude, for instance, are also great at writing and Q&A). "SWE-bench" refers to a software engineering benchmark built from real-world GitHub issues – higher is better. The context window covers everything the model processes in one request (your instructions, any pasted code, and prior conversation) plus its reply; as a rough guide, 1M tokens ≈ 750k words. Pricing is as of April 2025 for API usage; certain consumer apps or subscriptions may differ.
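To see what the pricing row means for an actual session, here is a small Python estimator built only from the table's list prices (April 2025). It models Gemini's switch to higher rates above 200k prompt tokens and the 50% batch discount the table lists for GPT-4.1 and Claude, but ignores cached-input discounts. The model labels are illustrative, and prices change, so treat the results as ballpark figures.

```python
# USD per 1M tokens as (input, output), copied from the comparison table (April 2025).
FLAT_PRICES = {
    "gpt-4.1": (2.00, 8.00),
    "claude-3.7-sonnet": (3.00, 15.00),
}
GEMINI_TIER_LIMIT = 200_000  # prompts above this size use Gemini's higher rates
GEMINI_PRICES = {"standard": (1.25, 10.00), "long": (2.50, 15.00)}
# Fraction of the list price you still pay when using batch mode.
BATCH_DISCOUNT = {"gpt-4.1": 0.5, "claude-3.7-sonnet": 0.5}


def request_cost(model: str, input_tokens: int, output_tokens: int,
                 batch: bool = False) -> float:
    """Estimate the USD cost of one request from list prices."""
    if model == "gemini-2.5-pro":
        tier = "standard" if input_tokens <= GEMINI_TIER_LIMIT else "long"
        in_price, out_price = GEMINI_PRICES[tier]
    else:
        in_price, out_price = FLAT_PRICES[model]
    cost = (input_tokens * in_price + output_tokens * out_price) / 1_000_000
    if batch:
        if model not in BATCH_DISCOUNT:
            raise ValueError(f"no batch discount listed for {model}")
        cost *= BATCH_DISCOUNT[model]
    return cost


if __name__ == "__main__":
    # A long session: 150k tokens of code and history in, 20k tokens of output.
    for name in ("gpt-4.1", "claude-3.7-sonnet", "gemini-2.5-pro"):
        print(f"{name}: ${request_cost(name, 150_000, 20_000):.4f}")
```

For that example the list prices work out to about $0.46 for GPT-4.1, $0.75 for Claude 3.7 Sonnet, and $0.39 for Gemini 2.5 Pro. Push the Gemini prompt past 200k tokens and both of its rates step up (300k in and 20k out costs about $1.05).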

Implications for Vibe Coding Workflows

So what do these developments mean for vibe coders – those of us who "let the AI do the heavy lifting" while we guide it with high-level prompts? In short, coding with AI is becoming more fluid, powerful, and accessible than ever. Here are a few ways GPT-4.1, Claude 3.7, and Gemini 2.5 can elevate your creative coding workflow:

- Rapid Prototyping: The improved coding abilities mean you can build functional prototypes faster and with less fuss. In vibe coding, you might start by saying "Create a simple game where a character jumps over obstacles" – and these models can output a runnable game on the first try or with minimal tweaks. Gemini has demonstrated it can sometimes produce a complex demo in one shot, and GPT-4.1's focus on clean output means fewer cycles fixing syntax or format issues. This lets you quickly experiment with ideas. You can generate a prototype, run it, see how it feels, and then refine the concept in a matter of minutes. The upshot is an explosion of creativity and iteration speed. You spend more time exploring ideas and less time wrestling with boilerplate code.

- Iterative Development & Pair Programming: With huge context windows, you can have an ongoing conversation with the AI that spans the entire development of your project. This is a game-changer for pair programming dynamics. As long as the history fits in its context window, the model can keep track of every decision, every change, and even why you made those changes. For example, you can feed in an entire codebase or your previous conversation and say, "Now refactor the data layer for better performance", and GPT-4.1 or Gemini will know exactly what you're referring to. It's like working with a partner that never forgets any detail. Claude's Thinking Mode adds another dimension – you can literally watch the AI think through a problem step by step, almost like watching an expert developer debug or plan a feature. This not only helps get the solution right, it also teaches you in the process. Vibe coding with Claude can feel like an interactive lesson, while with GPT-4.1 and Gemini it feels like a seamless collaboration where the AI just "gets it" after you explain what you need.

- Creative Experimentation: All three models enable more people to create with code without getting bogged down in syntax. This opens the door for artists, designers, and other non-traditional programmers to use code as a medium. Since you can prompt in plain English ("I want a visualization of swirling galaxies that reacts to music"), the technical barriers are lower. Gemini's multimodal skills even let you mix media – imagine giving it an image as inspiration for a generative art piece, or an audio clip and asking for code to visualize the sound. These were sci-fi ideas a couple of years ago; now they're plausible workflows. With vibe coding, you can "just see stuff, say stuff, run stuff" and it mostly works. The new models amplify this effect: more context means the AI can incorporate more of your creative input (be it text, code, or reference material), and better coding ability means it can handle ambitious ideas (like a physics simulation or an interactive story) that previously would have required an expert coder.

- Less Boilerplate, More Ideation: The models are getting better at following instructions and handling the dull parts of programming. GPT-4.1, for instance, is tuned to adhere to formatting and style guidelines – you can tell it "use functional components and Tailwind CSS for the React code" and it will oblige. This means as a developer you focus on the high-level design and let the AI fill in the boilerplate or repetitive patterns. Want to try three different UI color schemes or algorithm approaches? Just ask the AI to generate each variant. This swarm-of-suggestions approach was always a part of vibe coding, but now it's faster and cheaper to do. You could have GPT-4.1 write five different implementations of a function and then pick the best parts (a bit like having an intern write drafts that you refine). Claude's precision and "superior design taste" noted by some evals might even give you surprisingly polished outputs to choose from. In all, you spend more time in the creative or decision-making zone, and less in the grind of typing syntax.

- Cost and Accessibility: The downward trend in pricing (and free availability of models like Gemini in beta) means vibe coding is more accessible to indie developers and hobbyists. A year ago, running long coding sessions on GPT-4 could rack up significant costs; now you can do a lot more for a few pennies. This encourages experimentation. You might be willing to let an AI generate a whole project structure or multiple trials of a feature without worrying about token limits. Also, having multiple providers (OpenAI, Anthropic, Google) increases the chance you'll find a model that fits your budget or is integrated into tools you use. The competition is driving a "buyer's market" for AI coding assistants, which bodes well for the future of vibe coding.
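The "have the AI write several implementations and pick the best" idea from the list above works best with a quick automated check. The sketch below is a hypothetical harness: the three `slugify` variants stand in for drafts an AI might return, and the test cases encode what you actually want. Each candidate is scored by how many cases it passes, and a crash counts as a failure.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical drafts standing in for several AI-generated versions of one function.
def slugify_v1(title: str) -> str:
    return title.lower().replace(" ", "-")

def slugify_v2(title: str) -> str:
    # Collapse every run of non-alphanumeric characters into a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")

def slugify_v3(title: str) -> str:
    return "-".join(title.lower().split())

CANDIDATES: List[Callable[[str], str]] = [slugify_v1, slugify_v2, slugify_v3]

# (input, expected output) pairs describing the behavior you want.
TEST_CASES: List[Tuple[str, str]] = [
    ("Hello World", "hello-world"),
    ("  Vibe   Coding  ", "vibe-coding"),
    ("GPT-4.1 vs Claude!", "gpt-4-1-vs-claude"),
]


def score(candidate: Callable[[str], str], cases: List[Tuple[str, str]]) -> int:
    """Count the cases a candidate passes; exceptions count as failures."""
    passed = 0
    for arg, expected in cases:
        try:
            if candidate(arg) == expected:
                passed += 1
        except Exception:
            pass
    return passed


def rank(candidates, cases) -> List[Tuple[int, str]]:
    """Return (score, name) pairs, best first."""
    return sorted(((score(c, cases), c.__name__) for c in candidates), reverse=True)


if __name__ == "__main__":
    for passed, name in rank(CANDIDATES, TEST_CASES):
        print(f"{name}: {passed}/{len(TEST_CASES)} cases passed")
```

Writing the test cases yourself keeps you in the "decision-making zone": the AI supplies variety, and the tests encode your taste.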

Conclusion

The advent of GPT-4.1, Claude 3.7, and Gemini 2.5 Pro marks an exciting milestone in AI-assisted development. Each model pushes the envelope in its own way – GPT-4.1 with its enormous context and developer-friendly outputs, Claude 3.7 with its introspective reasoning and coding finesse, and Gemini 2.5 Pro with its sheer performance and multimodal prowess. For vibe coders, this means our AI collaborators are more capable than ever: they can handle bigger projects, adapt to our instructions more closely, and do it all at lower cost. Coding becomes less about wrestling with syntax and more about shaping ideas together with an AI – in other words, more vibes, less hassle.

In the end, choosing the "best" model might come down to your specific workflow. The fact that we have three such advanced AI coding assistants (and more on the horizon) is a win for the developer community. It means no matter your style – whether you're brainstorming a game, grinding out a web app, or just playing with code for fun – there's an AI out there ready to vibe with you and turn your ideas into reality. Happy coding!